If you’re reading this, then you’ve probably just finished building your website, are still in the process of building it, or are just thinking about an idea for one. And good for you! You’ve come to the right place, because after all that hard work building your site (or thinking about building it, because we all know that alone can cause nervous sweats), you want to make sure people can find it.

That’s where search engine optimization comes in. And if you’ve seen just about any movie ever made, you’ll know that the good guy typically wears white, while the bad guy usually dons darker, or even black, clothing. Does this villain look familiar?

And that’s where the name ‘Black Hat SEO’ comes from: it describes all the techniques you want to avoid when it comes to search engine optimization, whereas ‘White Hat SEO’ is the umbrella term for all of the things you should be doing to effectively optimize your site. Now, since one of my favourite characters is the all-too-famous Wicked Witch of the West, I’m going to focus on explaining the black hat techniques to avoid, so you can stay out of the Land of Oz and in the good graces of Google. So let’s get you back to Kansas!

Repeated Keywords

Think it’s a good idea to repeat the same keyword over and over again on the same page? Think again! One of the things Google has developed over the years (besides an answer to the age-old debate about who would win a fight between Batman and Superman) is a brain, and it’s become extremely good at recognizing when websites are stuffed with nonsensical copy in order to achieve a prime ranking. This applies to more than just your page copy, too: it includes things like the meta description and the ALT-tags on your images. Stick to one focus keyword per page, use it sparingly (but intelligently), and try to include it in the URL of the specific page you’re trying to get ranked.
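Not sure whether a page leans too hard on one keyword? A quick sanity check is to count how often the keyword appears compared with the total number of words. Below is a minimal Python sketch of that keyword-density check; the function name `keyword_density` and the simple word-splitting rule are my own assumptions for illustration, and Google doesn’t publish a ‘safe’ density, so treat the number as a red flag rather than a target.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in `text` that equal the single-word `keyword`.

    Hypothetical helper: a "word" here is a lowercase run of letters, digits,
    or apostrophes. This is a rough self-check, not how Google scores pages.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return hits / len(words)


stuffed = "Cheap tacos. Tacos tacos tacos! Best tacos in Toronto, tacos."
natural = "We serve fresh tacos made daily with local ingredients in Toronto."

print(f"{keyword_density(stuffed, 'tacos'):.0%}")  # → 60%
print(f"{keyword_density(natural, 'tacos'):.0%}")  # → 9%
```

A page where more than half the words are the same keyword is exactly the kind of copy no human wants to read.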

White Text

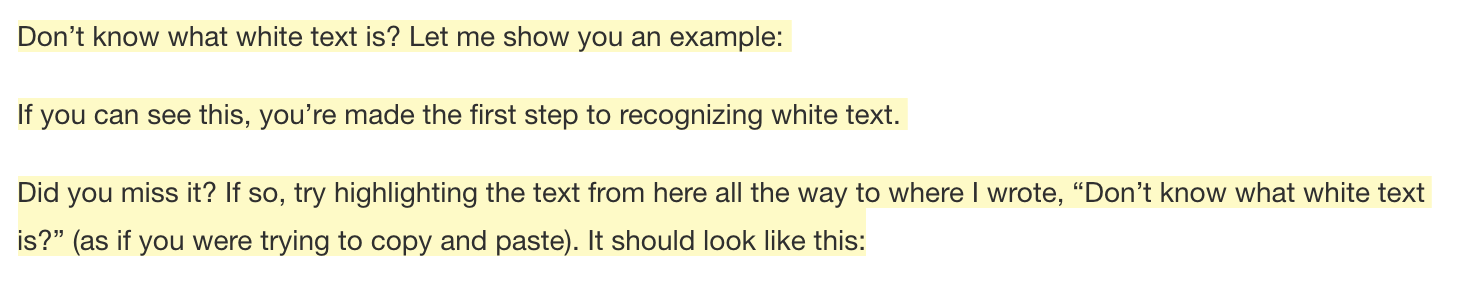

Don’t know what white text is? Let me show you an example:

If you can see this, you’ve taken the first step to recognizing white text.

Did you miss it? If so, try highlighting the text from here all the way to where I wrote, “Don’t know what white text is?” (as if you were trying to copy and paste). It should look like this:

You’ve found it! Hopefully you’ve also realized that it’s called ‘white text’ because the font colour of the text has been changed to match the background colour of the webpage (which is typically white on sites that follow traditional best practices). This makes the text invisible to the naked eye but still readable by search engines, and Google’s big brain has caught on to webmasters using this technique. Google will penalize your site’s search rankings for it, so whatever else you do to optimize your website, don’t use this method. Period. End of story.
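If you inherited a site and want to check it for white text, a rough approach is to look for elements whose text colour matches the page background. The Python sketch below uses the standard library’s `html.parser`; `WhiteTextFinder` and its helpers are hypothetical names, and the sketch only reads inline `style` attributes (not external stylesheets), assumes reasonably well-formed markup, and only understands hex colours plus the keywords `white` and `black`.

```python
from html.parser import HTMLParser

# Elements that never get a closing tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def normalize_color(value: str) -> str:
    """Lower-case a CSS colour and expand short hex (#fff -> #ffffff).

    Only hex values and the keywords 'white'/'black' are understood;
    rgb(), hsl() and other named colours are returned unchanged.
    """
    value = value.strip().lower()
    value = {"white": "#ffffff", "black": "#000000"}.get(value, value)
    if len(value) == 4 and value.startswith("#"):
        value = "#" + "".join(ch * 2 for ch in value[1:])
    return value


def inline_styles(attrs) -> dict:
    """Turn a style="a: b; c: d" attribute into {'a': 'b', 'c': 'd'}."""
    style = dict(attrs).get("style") or ""
    pairs = (decl.split(":", 1) for decl in style.split(";") if ":" in decl)
    return {name.strip().lower(): val.strip() for name, val in pairs}


class WhiteTextFinder(HTMLParser):
    """Collect text whose inline colour matches the page background colour."""

    def __init__(self, background: str = "#ffffff"):
        super().__init__()
        self.background = normalize_color(background)
        self.colors = []       # effective text colour of each open element
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        parent = self.colors[-1] if self.colors else None
        color = inline_styles(attrs).get("color")
        # CSS colour is inherited: without its own colour, a child uses its parent's.
        self.colors.append(normalize_color(color) if color else parent)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.colors:
            self.colors.pop()

    def handle_data(self, data):
        if self.colors and self.colors[-1] == self.background and data.strip():
            self.hidden_text.append(data.strip())


page = """
<body>
  <p>Welcome to our taco shop.</p>
  <p style="color: #FFF">cheap tacos best tacos toronto tacos</p>
</body>
"""
finder = WhiteTextFinder(background="white")
finder.feed(page)
print(finder.hidden_text)  # → ['cheap tacos best tacos toronto tacos']
```

Tracking the effective colour per element (rather than a simple hidden/visible flag) matters because a child can set its own colour and become visible again inside a white-text block.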

But before we really end there, there’s more to the story.

Invisible Links and Phantom Pixels

These are a little more complicated to explain. Invisible links are links to other webpages (usually ones with a high PageRank) that are either intentionally hidden with code or attached to a single character on the page, such as the period at the end of this sentence. ← If you click on that “.”, you’ll be directed to an Instagram account for Cold Tea, a local Toronto bar that prides itself on being extremely difficult to find (so much so that they don’t even have a website). They also don’t sell cold tea.
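To see what a single-character link looks like from a crawler’s point of view, here is a small Python sketch (standard-library `html.parser`) that lists every link whose anchor text is one character or less. `TinyAnchorFinder` and the example URLs are hypothetical. Links that wrap only an image, like a logo, have no text either, so they will show up too and need a human eye.

```python
from html.parser import HTMLParser


class TinyAnchorFinder(HTMLParser):
    """List links whose visible anchor text is at most `max_chars` characters."""

    def __init__(self, max_chars: int = 1):
        super().__init__()
        self.max_chars = max_chars
        self.current_href = None   # href of the <a> we are currently inside
        self.current_text = []     # text seen inside that <a>
        self.suspicious = []       # (href, anchor_text) pairs worth a closer look

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.current_href = dict(attrs).get("href") or ""
            self.current_text = []

    def handle_data(self, data):
        if self.current_href is not None:
            self.current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.current_href is not None:
            text = "".join(self.current_text).strip()
            if len(text) <= self.max_chars:
                self.suspicious.append((self.current_href, text))
            self.current_href = None


# Hypothetical page: the "." at the end of the first sentence is a link.
page = ('<p>This bar is hard to find<a href="https://example.com/hidden-target">.</a> '
        'Read more on our <a href="/blog">blog</a>.</p>')
finder = TinyAnchorFinder()
finder.feed(page)
print(finder.suspicious)  # → [('https://example.com/hidden-target', '.')]
```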

Another way to create invisible links (remember when I said they could use code?) is to use CSS to hide the text from the page. The same trick can also be used to fill your page with repeated keywords, and it looks something like this:

<div style="visibility:hidden;"><a href="http://www.anygivenwebsite.com">www.anygivenwebsite.com</a></div>

<div style="visibility:hidden;"> keyword keyword keyword keyword keyword </div>
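Content hidden with CSS is just as easy to spot in the source code. This Python sketch, using the standard library’s `html.parser`, collects the text and link targets inside elements whose inline style sets `visibility:hidden` or `display:none`. `HiddenContentFinder` is a hypothetical name, and the sketch simplifies CSS: it ignores stylesheets and classes, and it treats everything inside a `visibility:hidden` element as hidden even though a child could technically set `visibility:visible`.

```python
from html.parser import HTMLParser

# Elements that never get a closing tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def is_hidden(attrs) -> bool:
    """True if the element's inline style sets visibility:hidden or display:none."""
    style = (dict(attrs).get("style") or "").replace(" ", "").lower()
    return "visibility:hidden" in style or "display:none" in style


class HiddenContentFinder(HTMLParser):
    """Collect text and link targets that sit inside inline-hidden elements."""

    def __init__(self):
        super().__init__()
        self.stack = []         # for each open element: was it hidden?
        self.hidden_depth = 0   # number of hidden elements we are currently inside
        self.text = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        hidden = is_hidden(attrs)
        self.stack.append(hidden)
        if hidden:
            self.hidden_depth += 1
        if tag == "a" and self.hidden_depth:
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.stack:
            return
        if self.stack.pop():
            self.hidden_depth -= 1

    def handle_data(self, data):
        if self.hidden_depth and data.strip():
            self.text.append(data.strip())


page = """
<div style="visibility:hidden;"><a href="http://www.anygivenwebsite.com">www.anygivenwebsite.com</a></div>
<div style="visibility:hidden;"> keyword keyword keyword keyword keyword </div>
<p>Real content that visitors can actually see.</p>
"""
finder = HiddenContentFinder()
finder.feed(page)
print(finder.links)  # → ['http://www.anygivenwebsite.com']
print(finder.text)   # → ['www.anygivenwebsite.com', 'keyword keyword keyword keyword keyword']
```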

Phantom pixels work much like hiding a link in a single character, except that instead of disguising the link within the page text, it is hidden within a 1×1 pixel image. These links would be nearly impossible to actually click on, but your page’s source code would still contain the information that Google reads and processes when ranking your site. Again, this technique can also be used to hide keywords in the image’s ALT-tags, but how many times do I have to tell you? Don’t do it!
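Phantom pixels leave a recognizable fingerprint: an `<img>` with a width and height of 1, often wrapped in a link and sometimes carrying keyword-stuffed ALT text. The Python sketch below (standard-library `html.parser`, hypothetical class `PhantomPixelFinder`) finds those images. It only reads the `width` and `height` attributes, not CSS sizing. Keep in mind that not every 1×1 image is spam: analytics tools commonly use tracking pixels, so look at whether the image is linked or stuffed with keywords.

```python
from html.parser import HTMLParser


def as_int(value):
    """Parse a width/height attribute such as '1' or '1px'; None if missing or odd."""
    if value is None:
        return None
    value = value.strip().lower()
    if value.endswith("px"):
        value = value[:-2]
    return int(value) if value.isdigit() else None


class PhantomPixelFinder(HTMLParser):
    """Find images at most 1x1 pixel, noting any link around them and their ALT text."""

    def __init__(self):
        super().__init__()
        self.link_stack = []   # hrefs of the <a> elements we are currently inside
        self.pixels = []       # one dict per tiny image found

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            self.link_stack.append(attrs.get("href"))
        elif tag == "img":
            width, height = as_int(attrs.get("width")), as_int(attrs.get("height"))
            if width is not None and height is not None and width <= 1 and height <= 1:
                self.pixels.append({
                    "src": attrs.get("src"),
                    "link": self.link_stack[-1] if self.link_stack else None,
                    "alt": attrs.get("alt") or "",
                })

    def handle_endtag(self, tag):
        if tag == "a" and self.link_stack:
            self.link_stack.pop()


# Hypothetical page: a linked 1x1 image with stuffed ALT text, plus a normal logo.
page = ('<a href="https://example.com/target">'
        '<img src="pixel.gif" width="1" height="1" alt="keyword keyword keyword"></a>'
        '<img src="logo.png" width="200" height="80" alt="Company logo">')
finder = PhantomPixelFinder()
finder.feed(page)
print(finder.pixels)
# → [{'src': 'pixel.gif', 'link': 'https://example.com/target', 'alt': 'keyword keyword keyword'}]
```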

Mirror Sites

Now this is a touchy subject. While mirror sites do have a legitimate purpose in the wide world of the web, they need to be used properly to avoid being penalized by Google. So you might be asking yourself, ‘What do I need to keep in mind when it comes to mirror sites, and which companies can benefit from them?’

Mirror sites are beneficial to organizations that operate internationally. For example, while American users get directed to Google.com, we Canadians are directed to Google.ca. The key difference here is the top-level domain (TLD, the .com or .ca that you see at the end of a URL). A country-code top-level domain (ccTLD) such as .ca signals that a site serves a specific country, which means these sites operate in different geographical sections of the world. Google doesn’t treat this as duplicate content because each website is appropriate to a specific country, but if Canadians were directed to a copy of the site on the same top-level domain as the originally requested site (say, for example, we were directed to Googler.com when trying to reach Google.com), you’re going to run into problems.

Even so, this approach has its pitfalls. Say you have a website that operates in the United States and the United Kingdom: you will have domains for both www.yoursite.com and www.yoursite.co.uk. If both sites are exactly the same, except for contact information such as a phone number or address, Google may still perceive this as duplicate content in some cases. The best way to avoid this issue is to tailor the content on each site to its specific regional audience.
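To get a feel for how ‘exactly the same’ two regional pages are, you can compare their text. This Python sketch uses `difflib.SequenceMatcher` from the standard library to compute a word-level similarity ratio between 0 and 1. Google doesn’t publish how it measures duplicate content or where any threshold sits, so this is only a self-check; the page text, phone numbers, and the `similarity` helper are made up for illustration.

```python
from difflib import SequenceMatcher


def similarity(page_a: str, page_b: str) -> float:
    """Word-level similarity between two page texts: 1.0 means identical."""
    return SequenceMatcher(None, page_a.split(), page_b.split()).ratio()


# Hypothetical copy for www.yoursite.com and two versions of www.yoursite.co.uk.
us_page = "Fresh tacos delivered daily. Call us at 555-0100 or visit 12 Main Street."
uk_copy = "Fresh tacos delivered daily. Call us at 020 7946 0000 or visit 12 High Street."
uk_tailored = ("Fresh tacos delivered across London every day. "
               "Ring 020 7946 0000 or pop into 12 High Street.")

print(round(similarity(us_page, uk_copy), 2))      # → 0.79
print(round(similarity(us_page, uk_tailored), 2))  # → 0.4
```

Swapping only the contact details leaves most of the page identical, while rewriting the copy for the local audience pulls the score down noticeably.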

But you’re probably thinking, ‘Hey, Google.com looks the same as Google.ca, so why can they get away with having duplicate content when others can’t?’ Aside from the fact that Google is Google and controls the way things appear on Google, it’s because they’re a search engine, and the content (the links that appear in the search results) changes depending on the domain. For example, if you were to search ‘tacos’ on both www.google.com and www.google.ca, the results would be different, and the links displayed would be more relevant to where the user is searching from. So even though the search engine stays the same, the content changes dramatically depending on whether you’re searching for tacos in Toronto or St. Louis. Now I want tacos…

Auto Refreshes

Have you ever been on a website when, all of a sudden, the page automatically refreshes itself? This method was used to create the impression that a website was getting a lot more traffic than it really was, which was supposed to boost its rank in search engines. In the real world, it would be like an amusement park with only one guest, where the park’s administration counts the guests every five minutes and adds up the totals. After five hours, there has really only been one person at the park, but the statistics show that 60 people were there. And it isn’t hard for Google to figure this out by looking at the IP addresses behind those visits. You would have better luck spending an entire day at Disney World alone than pulling a fast one on Google in this area. But wouldn’t it be fun to have the Magic Kingdom all to yourself?
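The amusement-park problem is easy to see in code. This Python sketch takes hypothetical hit records as `(ip, path)` pairs and compares raw page views with the number of distinct IP addresses. The addresses come from the documentation-only ranges, and counting unique IPs is only a rough proxy (several people can share one IP, and Google doesn’t disclose exactly how it evaluates traffic), but it shows why 60 refreshes from one address don’t look like 60 visitors.

```python
from collections import Counter


def traffic_summary(hits):
    """Compare raw page views with distinct IP addresses for (ip, path) records."""
    per_ip = Counter(ip for ip, _path in hits)
    return {
        "page_views": sum(per_ip.values()),
        "unique_visitors": len(per_ip),  # rough proxy: one IP is treated as one visitor
        "busiest_ip": per_ip.most_common(1)[0] if per_ip else None,
    }


# Hypothetical log: one visitor whose page auto-refreshed 60 times,
# plus two genuine visits from other addresses.
refreshing_visitor = [("203.0.113.7", "/")] * 60
real_visitors = [("198.51.100.1", "/"), ("198.51.100.2", "/menu")]

print(traffic_summary(refreshing_visitor + real_visitors))
# → {'page_views': 62, 'unique_visitors': 3, 'busiest_ip': ('203.0.113.7', 60)}
```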

To learn about more techniques like SEO that can be used to organically draw traffic to your site, check out our white paper on Inbound Marketing. I promise you won’t be disappointed. Pinky promise.